How ChatGPT keeps improving but is still far from perfect


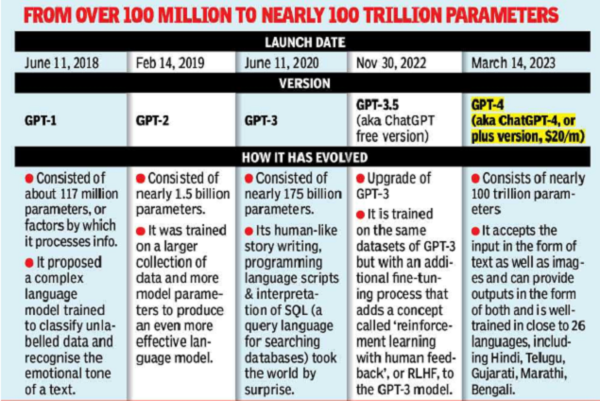

Within months of taking the internet by storm, ChatGPT can boast of a ‘Plus’ version that is said to be even better trained and smarter than the earlier iteration. That earlier version, built on GPT-3.5, notched 1 million users within a week of its launch, shattering previous records for user growth. The technology behind the artificial intelligence chatbot has generated widespread interest, and multiple tech firms, including Google, are working on similar products. But how do these chatbots work?

GPT in ChatGPT stands for Generative Pre-trained Transformer. ChatGPT is built on a large language model (LLM), GPT-3.5, that has been trained to follow a prompt and provide a detailed response. It interacts in a conversational manner: the dialogue format allows ChatGPT to answer follow-up questions, admit mistakes, challenge incorrect premises, and reject inappropriate requests. It is a deep learning-based autoregressive language model, meaning it generates human-like text one piece (a word or part of a word) at a time, with each new piece predicted from the text that came before it. The key training methods behind it are supervised learning and reinforcement learning from human feedback (RLHF). It builds each response using the user's earlier messages in the conversation, and it can be used to generate new ideas.
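To make "autoregressive" concrete, here is a deliberately tiny sketch. It is not how ChatGPT is implemented: a real LLM uses a transformer that looks at the whole conversation and learned probabilities over a large vocabulary. This toy version, under that simplifying assumption, just counts which word follows which in a short sample text (the corpus and the function names `train_bigram` and `generate` are hypothetical, made up for illustration). What it does share with GPT is the loop: predict the next word from the text so far, append it, and repeat.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count which word follows which in a toy corpus.

    This is a hypothetical stand-in for a trained language model: it only
    looks at the single previous word, whereas GPT models use the full context.
    """
    words = corpus.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def generate(model: dict[str, Counter], prompt: str, max_new: int = 5) -> str:
    """Autoregressive loop: each new word is predicted from the text so far,
    then appended so that it conditions the next prediction."""
    tokens = prompt.split()
    for _ in range(max_new):
        candidates = model.get(tokens[-1])
        if not candidates:
            break  # no known continuation, so stop early
        # Greedy decoding: pick the most frequent next word
        # (ties go to the word seen first in the corpus).
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)


corpus = "the cat sat on the mat and the cat ate"
model = train_bigram(corpus)
print(generate(model, "the", max_new=4))  # → the cat sat on the
```

Because every step feeds the model's own previous output back in, an early poor choice can steer the rest of the text, which is one intuition for why such models can produce fluent but wrong answers.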

Not entirely error-free

➤ ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers.

➤ ChatGPT is sensitive to small changes in the input phrasing and to repeated attempts at the same prompt. For example, given one phrasing of a question, the model can claim not to know the answer, but given a slight rephrase, it can answer correctly.

➤ The model is often excessively verbose and overuses certain phrases, such as restating that it’s a language model trained by OpenAI. These issues arise from biases in the training data (trainers prefer longer answers that look more comprehensive) and well-known over-optimisation issues.

➤ Ideally, the ChatGPT model would ask clarifying questions when the user provides an ambiguous query. Instead, the current models usually guess what the user intended.

➤ While the company has made efforts to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behaviour. It uses a tool (the Moderation API) to warn about or block certain types of unsafe content, but expects it to produce some false negatives and false positives for now.

➤ Despite its capabilities, GPT-4 has similar limitations to earlier GPT models. Most importantly, it is still not fully reliable: it “hallucinates” facts and makes reasoning errors.

➤ GPT-4 may misinterpret common phrases. For instance, it may take the phrase ‘you can’t teach an old dog new tricks’ literally.

SAT, LSAT? No problem

In casual conversation, the distinction between GPT-3.5 and GPT-4 can be subtle. The difference emerges once a task is complex enough: GPT-4 is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5.

OpenAI evaluated the two models against a number of benchmarks to understand the differences between them, including simulated exams originally designed for humans, and reported that GPT-4 outperforms its predecessor.

The company said that GPT-4 also scored higher than the average student in every subject except torts in a bar exam (see box) that requires in-depth legal knowledge, reading comprehension, and writing skills. This, it said, shows that large language models can attain the same level of proficiency as human attorneys in almost all US jurisdictions.

Scope for misuse

Cybersecurity: It is alarming that ChatGPT can produce spyware. Malware is nothing new, but ChatGPT can generate it endlessly. Anyone could use the app to generate malicious code and then modify that code to make it harder to detect or stop.

Phishing and scamming: ChatGPT could be the ideal AI writing tool for phishing. Phishing messages are often easy to spot because of their broken language, but ChatGPT can fix all of that. This is a fairly obvious use case for the app.

Cheating in schools/colleges: This is more likely to happen, though perhaps less alarming. A tool that can generate text about anything is close to perfect for a student trying to cheat, and it makes it hard for professors to catch them.

Taking over content writer jobs: Is it plagiarism if someone asks ChatGPT to write a book on a particular topic and then publishes it? While that debate continues, anybody can enter a prompt and get content tailored to their use case.

Fooling recruiters: According to a consultancy firm, ChatGPT outperformed humans at writing job applications by 80%. It is easy to see how ChatGPT could target all the key phrases that appeal to hiring managers or, more likely, get past the filters used by HR software.
